Apache Spark - Try it using Docker!

INTRODUCTION

Apache Spark is an open-source tool for data analysis and transformation. It is built on RDDs (Resilient Distributed Datasets): read-only collections of records partitioned across a cluster of machines and maintained in a fault-tolerant way.
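To make "read-only" and "fault-tolerant" concrete, here is a toy, single-process Python model. It is not Spark's API: the class `ToyRDD` and its methods are hypothetical. Each transformation returns a new dataset that records how it was built (its lineage), so a lost partition can be recomputed from that recipe instead of being restored from a copy of the data.

```python
from math import ceil
from typing import Callable, Dict, List, Tuple


class ToyRDD:
    """Hypothetical toy model of an RDD: an immutable, partitioned dataset
    that remembers how it was derived (its lineage)."""

    def __init__(self, compute: Callable[[int], Tuple], num_partitions: int,
                 lineage: List[str]) -> None:
        self._compute = compute                     # rebuilds partition i from the parent
        self.num_partitions = num_partitions
        self.lineage = lineage                      # names of the steps that built this RDD
        self._materialized: Dict[int, Tuple] = {}  # stands in for executor memory

    @staticmethod
    def parallelize(data: List, num_partitions: int) -> "ToyRDD":
        # Split the input into contiguous chunks, one per partition.
        size = ceil(len(data) / num_partitions)
        chunks = [tuple(data[i * size:(i + 1) * size]) for i in range(num_partitions)]
        return ToyRDD(lambda i: chunks[i], num_partitions, ["parallelize"])

    def map(self, f: Callable) -> "ToyRDD":
        # Transformations never modify self: they return a new RDD whose
        # partitions are defined in terms of this one's partitions.
        return ToyRDD(lambda i: tuple(f(x) for x in self.partition(i)),
                      self.num_partitions, self.lineage + ["map"])

    def filter(self, pred: Callable) -> "ToyRDD":
        return ToyRDD(lambda i: tuple(x for x in self.partition(i) if pred(x)),
                      self.num_partitions, self.lineage + ["filter"])

    def partition(self, i: int) -> Tuple:
        # Compute the partition on first use, then keep it "in memory".
        if i not in self._materialized:
            self._materialized[i] = self._compute(i)
        return self._materialized[i]

    def lose_partition(self, i: int) -> None:
        # Simulate the machine holding partition i crashing.
        self._materialized.pop(i, None)

    def collect(self) -> List:
        return [x for i in range(self.num_partitions) for x in self.partition(i)]


if __name__ == "__main__":
    evens = (ToyRDD.parallelize(list(range(10)), 3)
             .map(lambda x: x * x)
             .filter(lambda x: x % 2 == 0))
    print(evens.collect())   # [0, 4, 16, 36, 64]
    evens.lose_partition(1)  # simulate losing partition 1...
    print(evens.collect())   # ...it is rebuilt from lineage: [0, 4, 16, 36, 64]
    print(evens.lineage)     # ['parallelize', 'map', 'filter']
```

The design choice mirrors the idea behind RDDs: storing the recipe is much cheaper than replicating every partition, and immutability guarantees that recomputing a partition gives the same result.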

Spark achieves high performance for both batch and streaming data. It uses:

- a DAG scheduler

- a query optimizer

- a physical execution engine.
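The core idea of the DAG scheduler is to cut a job's graph of transformations into stages at shuffle boundaries: narrow operations (such as `map` and `filter`) are pipelined inside one stage, while wide operations (such as `reduceByKey`) need a shuffle and start a new stage. Below is a minimal sketch over a linear chain of operation names. The `WIDE_OPS` set and `split_into_stages` function are hypothetical simplifications; the real scheduler works on a graph of RDD dependencies, not on a list of strings.

```python
from typing import List

# Hypothetical, simplified classification: operations that need a shuffle
# (data moved between partitions) are "wide"; everything else is "narrow".
WIDE_OPS = {"reduceByKey", "groupByKey", "join", "repartition", "sortByKey"}


def split_into_stages(ops: List[str]) -> List[List[str]]:
    """Group a linear chain of operations into stages, starting a new
    stage at every wide (shuffle) operation."""
    stages: List[List[str]] = [[]]
    for op in ops:
        if op in WIDE_OPS and stages[-1]:
            stages.append([])  # shuffle boundary: the next stage reads shuffled data
        stages[-1].append(op)
    return [stage for stage in stages if stage]  # drop the empty stage for empty input


if __name__ == "__main__":
    word_count = ["textFile", "flatMap", "map", "reduceByKey", "filter"]
    print(split_into_stages(word_count))
    # [['textFile', 'flatMap', 'map'], ['reduceByKey', 'filter']]
```

A word count therefore runs as two stages: everything before the shuffle is pipelined in the first, and the aggregation happens in the second.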

Docker is a tool for creating isolated environments (containers) on a host computer.

I use Docker every day to create and simulate many scenarios. Today I will explain how to create an Apache Spark cluster with a master node and worker nodes.

docker-compose is a tool for defining an environment made of a set of containers.

docker-spark is a GitHub repository by big-data-europe. We can create an environment using the following docker-compose.yaml file:

```yaml
version: "3.3"
services:
  spark-master:
    image: bde2020/spark-master:2.4.0-hadoop2.7
    container_name: spark-master
    ports:
      - "8080:8080"
      - "7077:7077"
    environment:
      - INIT_DAEMON_STEP=setup_spark
  spark-worker-1:
    image: bde2020/spark-worker:2.4.0-hadoop2.7
    container_name: spark-worker-1
    depends_on:
      - spark-master
    ports:
      - "8081:8080"
    environment:
      - "SPARK_MASTER=spark://spark-master:7077"
  spark-worker-2:
    image: bde2020/spark-worker:2.4.0-hadoop2.7
    container_name: spark-worker-2
    depends_on:
      - spark-master
    ports:
      - "8082:8080"
    environment:
      - "SPARK_MASTER=spark://spark-master:7077"
```


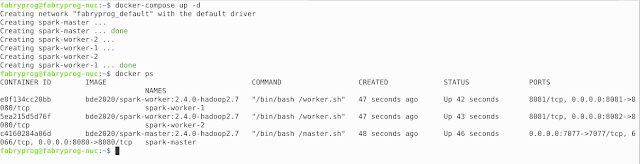

Now we can create the cluster on our machine using the command:

Now my Spark cluster is running!

A LITTLE DEEPER

Apache Spark runs on a master-slave architecture. Our cluster contains:

- one master node, which exposes the web UI (published on port 8080) and manages the jobs running on the workers

- two worker nodes
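One way to confirm that the master really sees both workers is to read its status programmatically. This is a minimal sketch under stated assumptions: the standalone master's web UI (published on localhost:8080 by the compose file) serves its state as JSON at `/json/`, with a `workers` list whose entries carry a `state` field. Check the endpoint and field names against your Spark version; `fetch_master_state`, `alive_workers` and `MASTER_JSON_URL` are hypothetical helper names.

```python
import json
from typing import Any, Dict
from urllib.request import urlopen

# Assumption: the master web UI is reachable through the "8080:8080" port
# mapping and exposes its state as JSON under /json/.
MASTER_JSON_URL = "http://localhost:8080/json/"


def fetch_master_state(url: str = MASTER_JSON_URL, timeout: float = 5.0) -> Dict[str, Any]:
    """Download and decode the master's JSON status page."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def alive_workers(state: Dict[str, Any]) -> int:
    """Count the workers that the master reports as ALIVE."""
    return sum(1 for w in state.get("workers", []) if w.get("state") == "ALIVE")


if __name__ == "__main__":
    try:
        state = fetch_master_state()
    except OSError as err:  # URLError and connection errors are OSError subclasses
        print(f"master not reachable: {err}")
    else:
        # With the compose file above, two workers are expected.
        print(f"alive workers: {alive_workers(state)}")
```

Keeping the parsing in a pure function (`alive_workers`) separate from the network call makes it easy to test without a running cluster.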

RUN AN EXAMPLE

Now we can submit a new job to the Spark cluster, or open a spark-shell connected to our cluster.
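As an example job, here is a sketch of the classic Monte Carlo estimate of pi (the same idea as the Pi example bundled with Spark), written with PySpark. Assumptions: PySpark matching the cluster version (2.4.0) is installed where the script runs, and the master is reachable at `spark://localhost:7077` through the port published in the compose file; `MASTER_URL`, the app name and the helper names are illustrative. In client mode the executors inside the containers must also be able to connect back to the driver, so running the script from inside the compose network may be simpler than running it from the host.

```python
from operator import add
from random import random

# Assumption: matches the "7077:7077" mapping in the compose file;
# adjust it if the driver runs somewhere else.
MASTER_URL = "spark://localhost:7077"


def inside_unit_circle(_: int) -> int:
    """Throw one random dart at the square [-1, 1] x [-1, 1]; 1 if it hits the circle."""
    x, y = random() * 2 - 1, random() * 2 - 1
    return 1 if x * x + y * y <= 1 else 0


def pi_from_hits(hits: int, samples: int) -> float:
    # area(circle) / area(square) = pi / 4
    return 4.0 * hits / samples


def main(samples: int = 1_000_000, partitions: int = 4) -> None:
    # Imported here so the helpers above work without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (SparkSession.builder
             .master(MASTER_URL)
             .appName("docker-spark-pi")
             .getOrCreate())
    hits = (spark.sparkContext
            .parallelize(range(samples), partitions)  # spread the samples over the workers
            .map(inside_unit_circle)
            .reduce(add))
    print(f"Pi is roughly {pi_from_hits(hits, samples)}")
    spark.stop()


if __name__ == "__main__":
    try:
        main()
    except ImportError:
        print("PySpark is not installed; install a version matching the cluster (2.4.0).")
```

While the job runs, it should appear as a running application in the master web UI on port 8080.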

WARNING

Java 8 may not retrieve the correct resource information (such as CPU and memory limits) inside a container; read this post to fix it (external resource).

PRODUCTION?

This guide covers a local cluster for testing or development purposes. But I am sure you are asking: "Can I use this configuration in a real production cluster?"

Docker has a cluster manager called Swarm that links different machines together. It is stable and easy to use!

YES: Docker Swarm and Apache Spark can be used in a real production cluster!

Goodbye, and have fun with Apache Spark!
